Deep Tarot Cards

Idea & Concept

I'm neither superstitious nor especially spiritually inclined, but I still find myself strangely attracted to Tarot cards. I'm not interested in their supposed power to tell one's fate, but in the fact that they are rich in culturally charged symbols, each drenched in complexity and spiritual meaning. As a designer, trained in the craft of navigating and leveraging the world of visual symbols, I am fascinated by that.

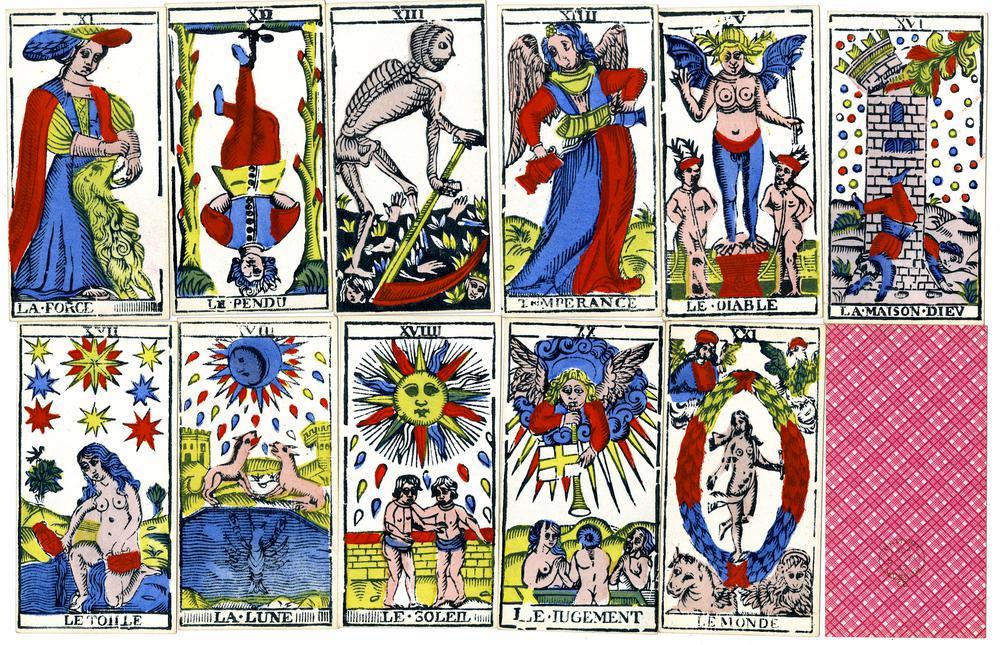

Selection of trumps from the Tarot de Marseilles, a typical 18th-century pack (© The Trustees of the British Museum, released as CC BY-NC-SA 4.0)

By virtue of the abundance of symbolism and the possible associations it provokes in the viewer, Tarot can be understood as a procedural algorithm generating an endless stream of narratives:

"Tarot, then, is a procedural algorithm: cards are drawn and placed into position according to the system the reader has chosen. The results are then read as a whole, both meanings of individual cards and the interplay of patterns coalescing to form the final impression of the reading."

– Cat Manning, 2019, “Tarot as Procedural Storytelling” in “Procedural Storytelling in Game Design”
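Read literally, the procedure Manning describes is small enough to sketch in a few lines of Python. This is only an illustration: the three-position spread, the function name and the seed are assumptions of mine, and the card names follow the common modern naming of the 22 trumps rather than any specific historical pack.

```python
import random

# The 22 trumps in their common modern naming (a full deck has 78 cards).
MAJOR_ARCANA = [
    "The Fool", "The Magician", "The High Priestess", "The Empress",
    "The Emperor", "The Hierophant", "The Lovers", "The Chariot",
    "Strength", "The Hermit", "Wheel of Fortune", "Justice",
    "The Hanged Man", "Death", "Temperance", "The Devil",
    "The Tower", "The Star", "The Moon", "The Sun",
    "Judgement", "The World",
]

# Hypothetical three-card spread: each position frames how its card is read.
SPREAD = ["Past", "Present", "Future"]


def draw_reading(deck, positions, seed=None):
    """Draw one distinct card per position and pair it with that position."""
    rng = random.Random(seed)
    cards = rng.sample(deck, k=len(positions))
    return list(zip(positions, cards))


if __name__ == "__main__":
    for position, card in draw_reading(MAJOR_ARCANA, SPREAD, seed=7):
        print(f"{position}: {card}")
```

The algorithm only produces the arrangement. The narrative emerges when the reader interprets each card in light of its position and its neighbours.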

So, what if we rethought the motifs and symbols shown on each card? Better yet, what if we let an image generation network reinterpret them? An ML network (more precisely, a GAN) can use the card title (e.g. 'The Magician') as a prompt to generate an image associated with it. Consequently, the GAN offers its own interpretation of what the card title means, with its very own set of associations. Ultimately, this provokes new creative connections in us as observers, leading to a recursion of interpretations. Venturing into this recursive loop of creative associations was the goal of this project.

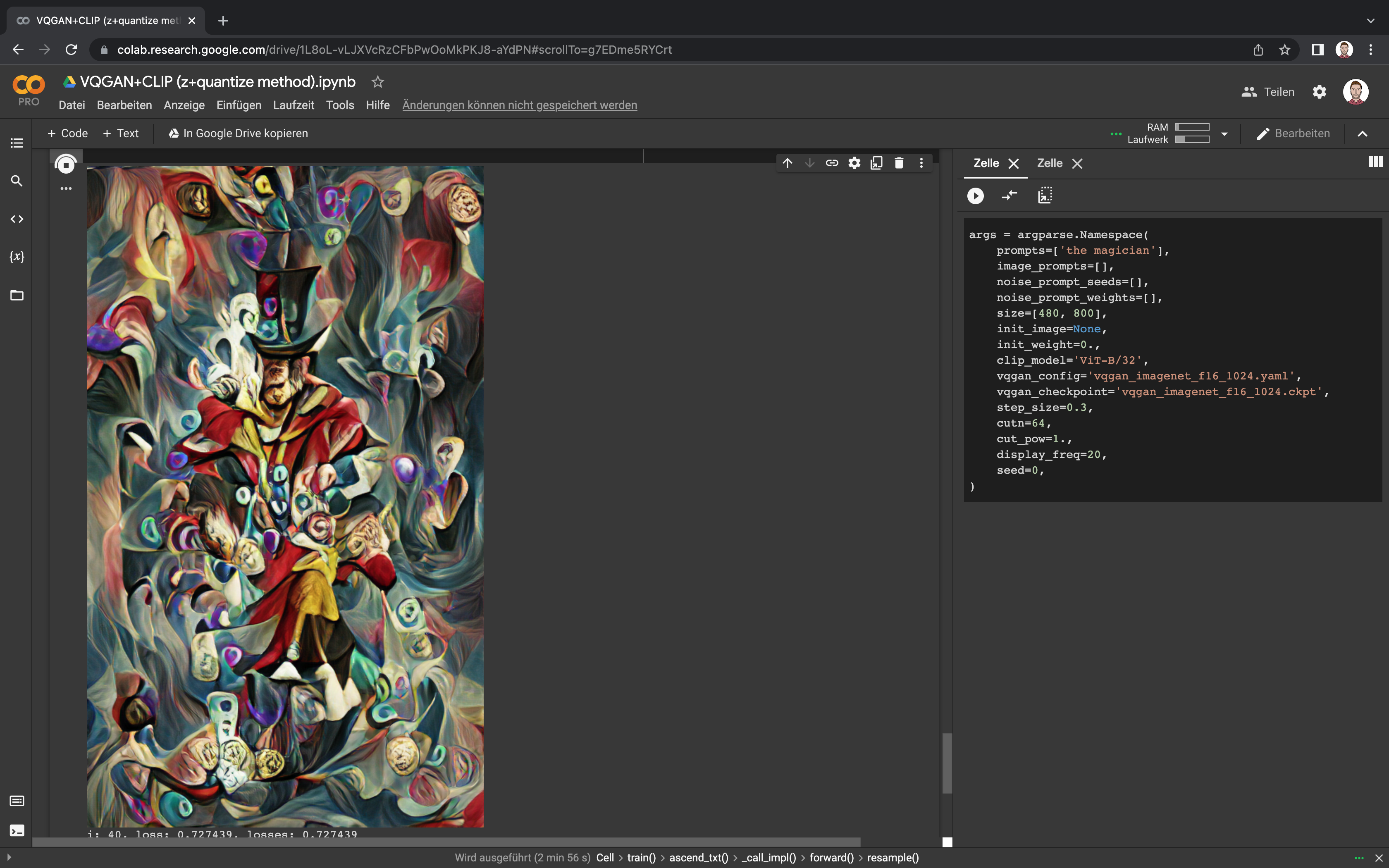

Working with VQGAN + CLIP

For the technical foundation, I used VQGAN+CLIP by Katherine Crowson (GitHub, Twitter), executed on Google Colab. The original BigGAN+CLIP method was by advadnoun. Tweaking settings like the initial weights, the step size, the iteration count and the starting seed, I slowly worked my way to settings that produced desirable outcomes, both in aesthetics and in the variety of associations evoked. The final result is based on the idea of 'cutting off' the image generation early, in order to preserve and amplify the dream-like ambiguity of the visuals, before the GAN iterates to something too concrete and specific.
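To make the 'cutting off' idea more tangible, here is a toy sketch in Python. It is not the VQGAN+CLIP notebook: the loss is a simple squared distance standing in for the prompt-guided loss, and all names and values (`RunSettings`, `cutoff`, the step size) are hypothetical. It only shows how a seeded, iterative optimization that is stopped early stays further away from its fully 'resolved' state.

```python
import random
from dataclasses import dataclass


@dataclass
class RunSettings:
    """Hypothetical names for the kinds of knobs mentioned in the text."""
    seed: int = 42
    step_size: float = 0.01
    max_iterations: int = 500
    cutoff: int = 60  # stop here, well before the run settles


def toy_loss(latent, target):
    """Stand-in for the prompt-guided loss: squared distance to a target."""
    return sum((x - t) ** 2 for x, t in zip(latent, target))


def run(settings, target, stop_early=True):
    """Gradient descent on the toy loss, optionally cut off early."""
    rng = random.Random(settings.seed)
    latent = [rng.uniform(-1.0, 1.0) for _ in target]  # seeded starting point
    limit = settings.cutoff if stop_early else settings.max_iterations
    limit = min(limit, settings.max_iterations)
    for _ in range(limit):
        # The gradient of the squared distance is 2 * (x - t).
        latent = [
            x - settings.step_size * 2 * (x - t)
            for x, t in zip(latent, target)
        ]
    return latent, toy_loss(latent, target)


if __name__ == "__main__":
    target = [0.5, -0.25, 0.75]
    _, early = run(RunSettings(), target, stop_early=True)
    _, full = run(RunSettings(), target, stop_early=False)
    print(f"loss at cutoff: {early:.6f}, loss after full run: {full:.6f}")
```

Keeping the seed fixed while varying only the step size or the cutoff makes runs comparable, which mirrors the way the settings were explored here.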

Tweaking step settings within the same seed.

Screenshot showing the generation process in Google Colab.

The set of cards after finishing the card layout in Figma.

Presenting the proof-of-concept

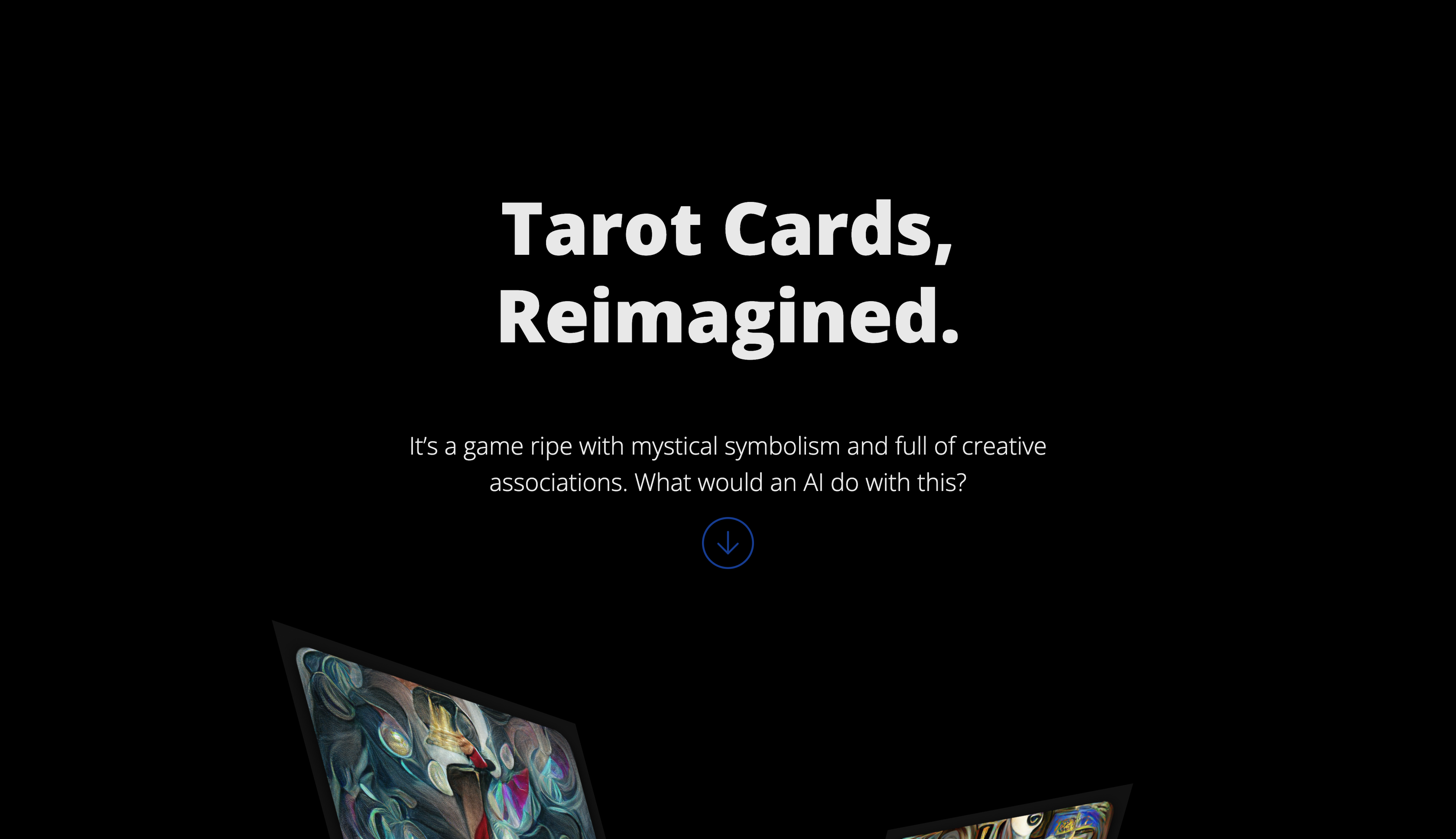

After deeming the URL deep-tarot.cards a fitting stage, the project was ready to be showcased. The design of the website emphasizes the mystical but playful character of the idea, while paying homage to the physicality of a real set of Tarot cards.

Visit the Website

First viewport of the website.

Next Steps

As for the future, the project clearly offers a lot of creative potential to explore. The first step is, of course, to complete the whole set of 78(!) cards. A physical edition is the first thought that springs to mind, but there are many intriguing ways of digital distribution as well.

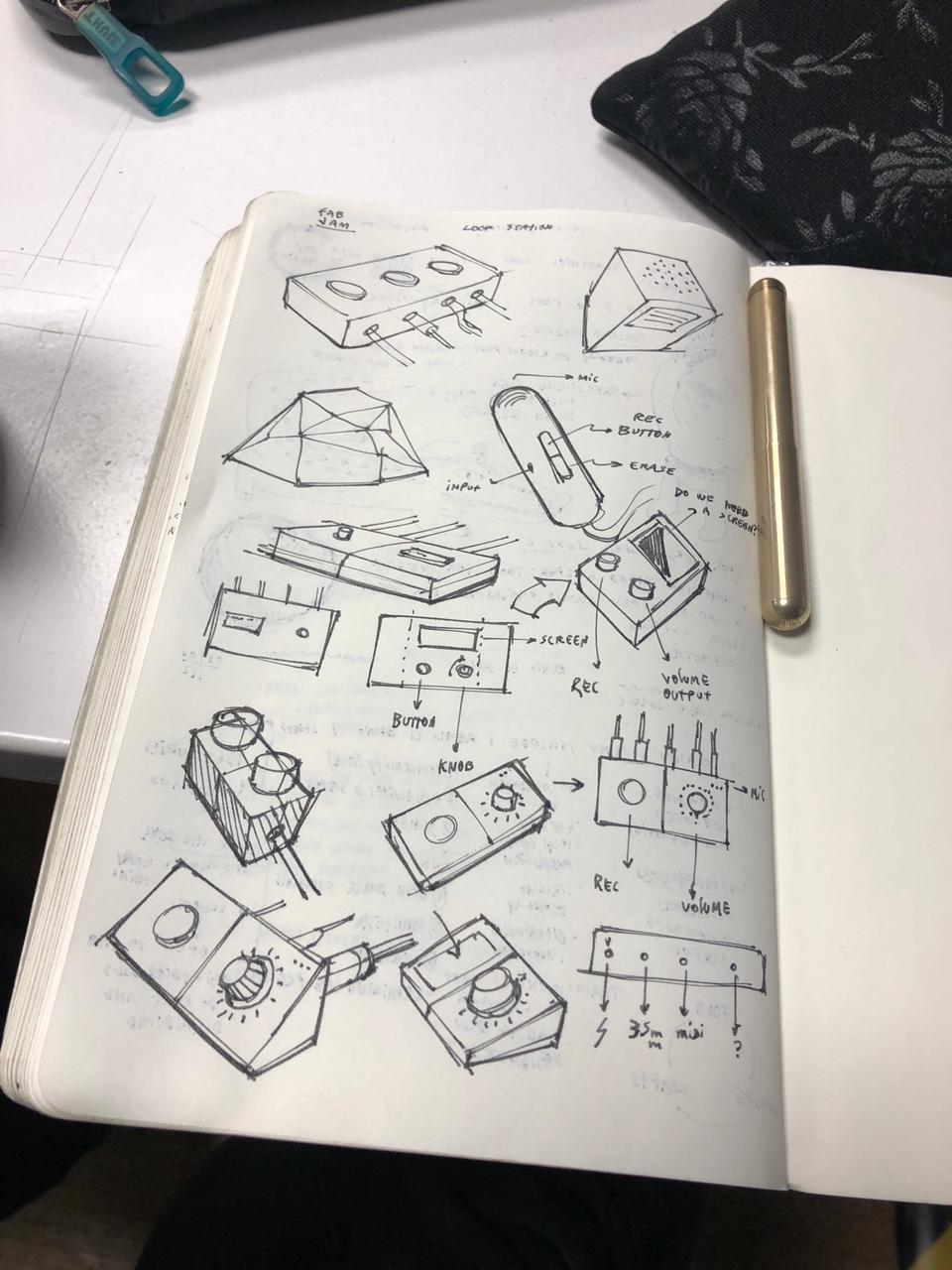

Open Jam Box with Joaquin

With Joaquin, the idea quickly materialized to build on last term's intervention and further investigate the musical side. To that end, we are planning to build a modular, open-source loop station called Open Jam Box. The steps so far have centered on defining the concept, mapping out inspiration and stakeholders, exploring the feasibility of different technical setups, and sketching hardware designs, both on paper and as 3D models for later digital fabrication. This project will accompany us throughout most of FabAcademy, as there are a multitude of connection points between the two tracks.

Mapping out inspiration and stakeholders on Miro (left), building a technical prototype (right).

Sketches by Joaquin, showing different explorations into hardware form factors and modularity.

Artificial Constellations with Tatjana

We had been talking about an A/V collaboration for weeks, when Tatjana presented her initial outline of Artificial Constellations in Design Studio 03. I was intrigued by the concept, alongside its potential in audiovisual experimentation and creative storytelling. Here's the idea in her own words.

"I got this idea when I was watching a live performance on YouTube, reminiscing of a time when concerts were possible. I came across a comment saying that they would love to listen to the artist perform while looking up at the stars, because it would be magical. Then, I looked out of my window & couldn't see any stars, partly due to the cloudy sky, partly because of the pollution. I thought to myself -What if we couldn't see the stars anymore?

I got a vision of a possible Design Intervention: An experience where you look at a virtual sky, projected by a machine. The stars would move around, changing shape, position & color according to the music being played, until they slowly dimmed out & disappeared. I thought it could be interesting to produce an AI-made ambient song, which would contribute to the title - Artificial stars. The project would ideally make the participants reflect on the importance of clear skies, and how city smog and pollution could potentially erase them."

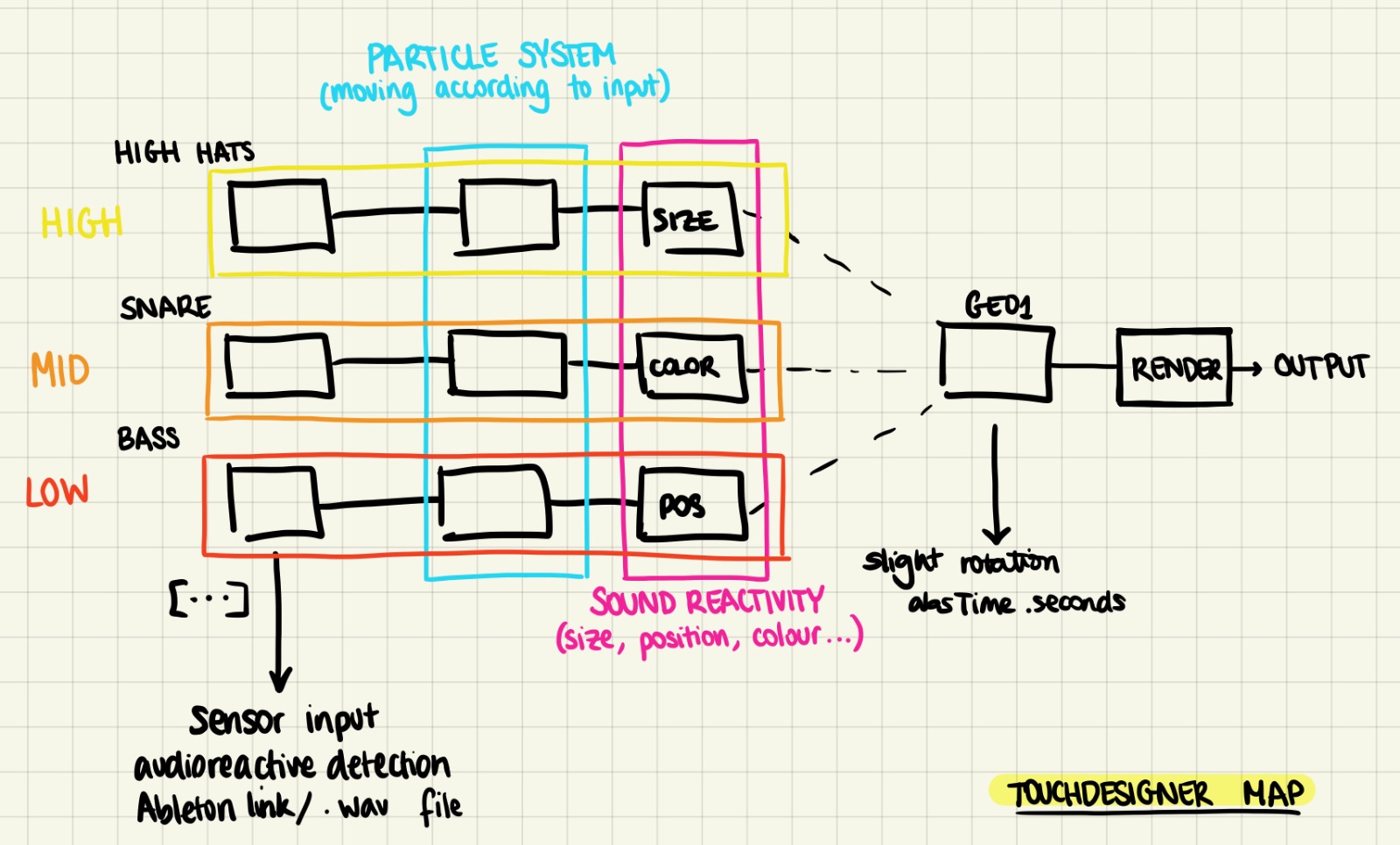

Subsequently, I produced a four-minute track in Ableton Live to serve as a basis for creative experimentation. The melodies were largely generated by an ML network using the Magenta plug-in (based on TensorFlow), while I handled the arrangement and effects. Through the sound design, I tried to capture the emotional narrative of a clear, serene night that slowly drifts into something more sinister and unsettling as the stars start to move around in the animation. After I sent this rough draft over to Tatjana, she used it as a basis for building a proof-of-concept in TouchDesigner, separating high, middle and low frequencies and mapping them to different properties of the instanced particles. More on the details of the concept and technicalities below.

Sketch by Tatjana showing the generative visuals concept.

Sound design in Ableton using Magenta plug-ins.
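The frequency-band mapping described above was built in TouchDesigner, but the underlying idea can be sketched in plain Python. The following is a minimal illustration under my own assumptions: the cut-off frequencies, the function names and the property names (`scale`, `drift`, `brightness`) are hypothetical and not taken from the actual patch.

```python
import cmath
import math


def band_energies(frame, sample_rate, low_cut=250.0, high_cut=4000.0):
    """Split one audio frame into low/mid/high energy via a naive DFT.

    The cut-off frequencies are illustrative assumptions.
    """
    n = len(frame)
    energies = {"low": 0.0, "mid": 0.0, "high": 0.0}
    for k in range(1, n // 2):  # skip DC, stay below the Nyquist bin
        freq = k * sample_rate / n
        coeff = sum(
            sample * cmath.exp(-2j * math.pi * k * i / n)
            for i, sample in enumerate(frame)
        )
        band = "low" if freq < low_cut else "mid" if freq < high_cut else "high"
        energies[band] += abs(coeff) ** 2
    return energies


def particle_properties(energies):
    """Map each band's share of the energy to a hypothetical property."""
    total = sum(energies.values()) or 1.0  # avoid dividing by zero on silence
    return {
        "scale": energies["low"] / total,        # lows -> particle size
        "drift": energies["mid"] / total,        # mids -> movement
        "brightness": energies["high"] / total,  # highs -> glow
    }


if __name__ == "__main__":
    rate, n = 16000, 256
    # Test tone: a 125 Hz sine, which falls into the low band.
    frame = [math.sin(2 * math.pi * 125 * i / rate) for i in range(n)]
    print(particle_properties(band_energies(frame, rate)))
```

In a real-time setting an FFT would replace the naive transform, and the band values would typically be smoothed over time before driving the visuals.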